Navigating the Landscape of Large Language Models

Benchmarks, Legal Use Cases, and Future Directions

This article first appeared on LinkedIn in a blog by OpenNyAI. You can read the original here.

Dive into the evolving legal artificial intelligence (AI) landscape as we explore the nuanced performance of Large Language Models (LLMs) in the Indian legal sphere. In this piece, we dissect a range of legal tasks, from document review to litigation prediction, where LLMs have shown promise. Our aim? To shed light on the opportunities, learnings, and potential strategies for training LLMs to better navigate the labyrinthine corridors of Indian legal doctrine and practice.

1. Legal Tasks

Introduction to common legal tasks

Legal AI tasks are the activities involved in carrying out a legal procedure. They range from providing legal advice to semantic search of judgments and sentiment analysis of precedents. While these may sound like specialized versions of general NLP tasks, the nuances of legal text call for specific approaches to text retrieval, augmentation, and generation.

Some common Legal NLP tasks are Information Extraction, Summarization, Case Similarity Identification, Reasoning-Based Tasks, and Generative Tasks. These can be broken down further into subtasks such as Named Entity Recognition and Rhetorical Role Identification.

A survey paper from 2021 on the benchmarks needed for Indian Legal NLP shows a glimpse of legal tasks and their classification:

Even with access to foundational models capable of general Natural Language Understanding tasks, curated data may still be required for domain fine-tuning. Our initial exploration suggests that available GPT models do not perform up to the desired levels in the Indian legal domain. Thus, many downstream Legal AI tasks would require a well-trained foundational model and instruction fine-tuning to be helpful. For high-stakes areas such as law and justice, the model and the underlying fine-tuning data need to be built openly.

Although Large Language Models (LLMs) can be used for the above tasks, we believe that running more specialized models for a subset of tasks and reserving LLMs for higher-level NLP tasks offers the most economical combination in NLP pipelines for the legal domain. In the following sections, we describe the results from our experiments on using GPT to perform different legal tasks. Then we describe some datasets and tasks that we believe would add immediate value to the legal ecosystem.

2. Sample Task Descriptions

Here are some examples of legal tasks and the data associated with them.

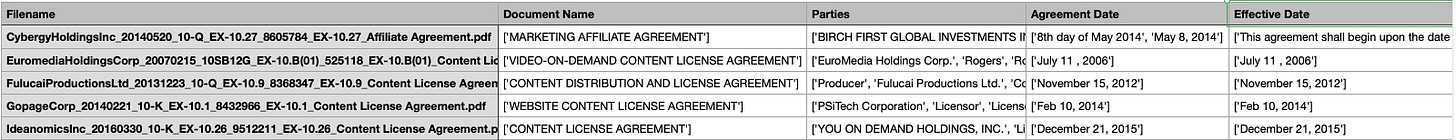

2.1. Contract Understanding using CUAD Data

The final data looks as provided below—

This dataset allows us to build AI models that can extract this information automatically and highlight which contract sections need to be reviewed by lawyers.

2.2. Contract Natural Language Inference

A Natural Language Inference task looks at a hypothesis and a premise and decides whether the premise entails or contradicts the hypothesis. In the contracts NLI task, a system is given a set of hypotheses (such as “Some obligations of Agreement may survive termination.”) and a contract. It is asked to classify whether each hypothesis is entailed by, contradicted by, or not mentioned by (neutral to) the contract, and to identify evidence for the decision as spans in the contract.
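The input and output of this task can be sketched as a small data structure. This is only an illustration: the names `NLILabel`, `NLIPrediction`, and `evidence_text`, the use of character offsets for spans, and the sample contract text are assumptions made for this example, not the format of any particular dataset.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class NLILabel(Enum):
    """The three outcomes described above (names are illustrative)."""
    ENTAILMENT = "entailment"
    CONTRADICTION = "contradiction"
    NOT_MENTIONED = "not_mentioned"


@dataclass
class NLIPrediction:
    hypothesis: str
    label: NLILabel
    # Assumed representation: (start, end) character offsets into the contract.
    evidence_spans: list[tuple[int, int]]


def evidence_text(contract: str, prediction: NLIPrediction) -> list[str]:
    """Return the contract substrings that the prediction cites as evidence."""
    return [contract[start:end] for start, end in prediction.evidence_spans]


# Hypothetical two-sentence contract, written only for this example.
contract = (
    "This Agreement terminates after two years. "
    "The confidentiality obligations in Section 4 survive termination."
)
span_start = contract.index("The confidentiality")
prediction = NLIPrediction(
    hypothesis="Some obligations of Agreement may survive termination.",
    label=NLILabel.ENTAILMENT,
    evidence_spans=[(span_start, len(contract))],
)
print(prediction.label.value, evidence_text(contract, prediction))
```

Keeping the evidence as spans rather than free text makes it possible for a lawyer to check each label against the exact contract wording it relies on.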

2.3. Legal Issue Spotting

The goal of the Learned Hands dataset is to spot the category of legal issues in Reddit posts by laypeople asking for legal help. Legal categories are predefined using the LIST taxonomy.

Legal experts are shown a situation and asked if they see a particular type of legal category in that situation or not. An example is shown below.

3. Performance of LLMs on Legal Tasks

The performance of LLMs can be assessed using standard law exams in India and the USA.

3.1. Law Exams

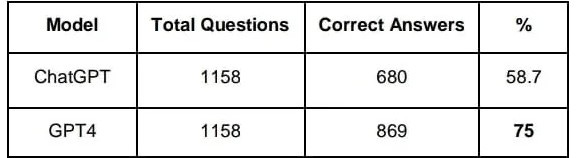

3.1.1. All India Bar Examination Performance

We studied the performance of OpenAI models on All India Bar Examination (AIBE) questions from the past 12 years. The scanned question papers with answer keys were converted to text using optical character recognition. This data was manually verified for correctness. The questions are multiple-choice questions from various areas of law. The minimum passing percentage is 40%. We tested the ChatGPT and GPT-4 models on these questions and found that both scored well above the passing mark. Although most of the AIBE questions were recall-based, the models could also answer reasoning questions well.
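Scoring a multiple-choice paper against its answer key is simple to express in code. The sketch below is not the evaluation script used in the study: the function names and the five-question answer key are hypothetical, and only the 40% pass mark comes from the text above.

```python
from __future__ import annotations

PASS_PERCENTAGE = 40.0  # minimum passing percentage stated in the text


def score_exam(predicted: dict[int, str], answer_key: dict[int, str]) -> float:
    """Return the percentage of answer-key questions answered correctly.

    Unanswered questions count as wrong; option letters are compared
    case-insensitively.
    """
    if not answer_key:
        raise ValueError("answer key is empty")
    correct = sum(
        1
        for question, answer in answer_key.items()
        if predicted.get(question, "").strip().upper() == answer.strip().upper()
    )
    return 100.0 * correct / len(answer_key)


def has_passed(percentage: float) -> bool:
    """Apply the pass mark to a percentage score."""
    return percentage >= PASS_PERCENTAGE


# Hypothetical data: question number -> chosen option.
answer_key = {1: "A", 2: "C", 3: "B", 4: "D", 5: "A"}
model_answers = {1: "a", 2: "C", 3: "D", 5: "A"}  # question 4 left unanswered
percentage = score_exam(model_answers, answer_key)
print(f"{percentage:.1f}% -> {'pass' if has_passed(percentage) else 'fail'}")
```

In practice the harder part is upstream of this function: mapping a model's free-text response to a single option letter, which is not shown here.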

3.1.2. USA Law Exams

Some studies have examined the performance of OpenAI models on legal tasks in the US. “GPT-4 Passes the Bar Exam” shows that GPT-4 passes the US Uniform Bar Exam (multiple-choice questions and two written exams) with flying colours, scoring better than the average student. “ChatGPT Goes to Law School” says that ChatGPT performed on average at the level of a C+ student, achieving a low but passing grade in all four courses at the University of Minnesota Law School.

3.2. Observations from Past Studies

Based on these papers and other literature, here are some observations.

3.2.1. What tasks are LLMs good at?

Current LLMs are very good at conversation and general reasoning tasks. They can have coherent conversations with users and answer questions. Strong results on bar exams in the US and India indicate that OpenAI LLMs are good at academic legal tasks. LLMs are very good at summarizing legal doctrine, facts, and holdings. LLMs can also generate relevant precedents in the US context. LLMs can also provide good explanations for their predictions. LLMs have shown good performance on zero-shot or few-shot classification tasks, where a text must be classified into one of a few predefined categories.

3.2.2. What tasks do LLMs need to be better at?

Most state-of-the-art generative LLMs (specifically ChatGPT and GPT-4) could be better at the following tasks.
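As a rough illustration of zero-shot or few-shot classification, the sketch below builds a prompt from a few labelled examples and maps the model's reply back to a known category. It does not call any model. The function names and the category names are hypothetical and are not taken from any taxonomy mentioned in this article.

```python
from __future__ import annotations


def build_few_shot_prompt(
    categories: list[str], examples: list[tuple[str, str]], text: str
) -> str:
    """Build a classification prompt; an empty `examples` list gives a zero-shot prompt."""
    lines = [
        "Classify the text into exactly one of these categories: "
        + ", ".join(categories)
        + ".",
        "",
    ]
    for example_text, label in examples:
        lines += [f"Text: {example_text}", f"Category: {label}", ""]
    lines += [f"Text: {text}", "Category:"]
    return "\n".join(lines)


def parse_label(response: str, categories: list[str]) -> str | None:
    """Map a raw model response to a known category, or None if it matches none."""
    cleaned = response.strip().lower()
    for category in categories:
        if cleaned == category.lower():
            return category
    return None


# Hypothetical categories and examples, for illustration only.
categories = ["Housing", "Employment", "Family"]
examples = [
    ("My landlord refuses to return my deposit.", "Housing"),
    ("I was dismissed without notice.", "Employment"),
]
prompt = build_few_shot_prompt(categories, examples, "We disagree about child custody.")
print(prompt)
```

Returning `None` for an unrecognised reply, rather than guessing, keeps out-of-vocabulary answers visible so they can be counted as errors during evaluation.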

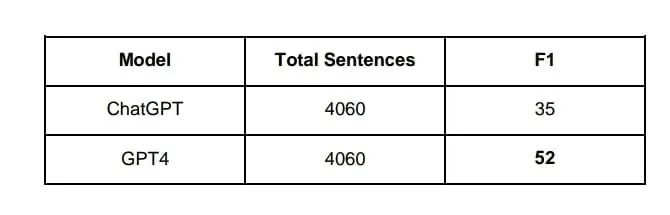

Information Extraction: Extract relevant information from a given set of documents. Most LLMs struggle to extract named entities from text, like names of statutes, precedents, parties involved, etc. Specific models which are fine-tuned for such tasks perform much better than LLMs.

E.g., in extracting legal named entities, we found that ChatGPT performs much worse than the fine-tuned model.

Access to the latest information: Most LLMs have a cut-off date for the information they have seen. Since statutes and case law keep evolving, it is essential to have access to up-to-date information. Retrieval-augmented systems seem to be a good approach. Autonomous agents with the ability to search the internet could work well; however, there might be challenges in separating the signal from the noise.

Spotting legal issues: Identifying legal issues in a given situation is a core legal task. Previous studies have shown that ChatGPT can miss spotting important legal issues given a situation, especially while using an open-ended prompt.

Application of Rules to Facts: Generating essays or arguments that involve assessing specific cases, theories, and doctrines is a challenging task for which LLMs need improvement.

Hallucinations: LLMs are known for providing incorrect information confidently. It is challenging to separate the incorrect information from the correct.

Math calculations: An external tool is necessary for tax matters or calculations of awards or punishments. LLMs don’t have an inherent capability to calculate as they are probabilistic models, but connecting them with external tools seems to be the right way. However, building the agents is non-trivial for legal tasks and may require a tree of thoughts or even a bank of thoughts approach.

Application of rules that vary across jurisdictions: LLMs are better at applying uniform legal rules than rules that vary across jurisdictions. This may require fine-tuning and RAG systems to achieve good results. This also raises the question of what data is available to test the performance of these systems in various geographies.
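The "Math calculations" point above, connecting an LLM to an external tool, can be sketched with a small deterministic calculator. In this sketch the model is assumed to propose only an arithmetic expression as text, and ordinary code computes the value. The function name `evaluate_arithmetic` and the sample expression are hypothetical, and this is a minimal sketch rather than a full agent.

```python
import ast
import operator

# Only these four binary operators are allowed.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate_arithmetic(expression: str) -> float:
    """Evaluate +, -, *, / over numeric literals without using eval()."""

    def visit(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return visit(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](visit(node.left), visit(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -visit(node.operand)
        # Names, function calls, and anything else are rejected.
        raise ValueError("unsupported expression")

    return visit(ast.parse(expression, mode="eval"))


# Hypothetical expression a model might propose: principal * rate / 100 * years.
print(evaluate_arithmetic("250000 * 9 / 100 * 3"))
```

Restricting the tool to a whitelist of syntax nodes means a malformed or malicious model output fails loudly instead of being executed.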

4. Role of the Community in Contributing to it

In summary, the open-source movement is no longer a luxury or a fringe philosophy; it’s necessary for the robust and ethical development of AI technologies. It is through open source that we can ensure, in high-stakes use cases such as Law and Justice, data auditability, preventing misuse and facilitating accountability. It brings a level of transparency that is essential to prevent ‘black box’ scenarios, allowing us to understand how decisions are made and how algorithms work. Open source also fosters an environment of open innovation, where diverse perspectives from around the globe can contribute and collaboratively shape the trajectory of AI. Therefore, we mustn’t merely witness this evolution; we should actively participate in it. Engaging with open-source initiatives today is our best defence against being taken by surprise by AI in the future. This involvement ensures that we are not just passive consumers but active contributors to shaping an equitable, transparent, and accountable AI-powered future.

References

Benchmarks for Indian Legal NLP: A Survey

Legal Natural Language Processing Resources

Natural Language Processing in the Legal Domain

Old paper on NLP in Law

Evaluating ChatGPT’s Information Extraction Capabilities: An Assessment of Performance, Explainability, Calibration, and Faithfulness